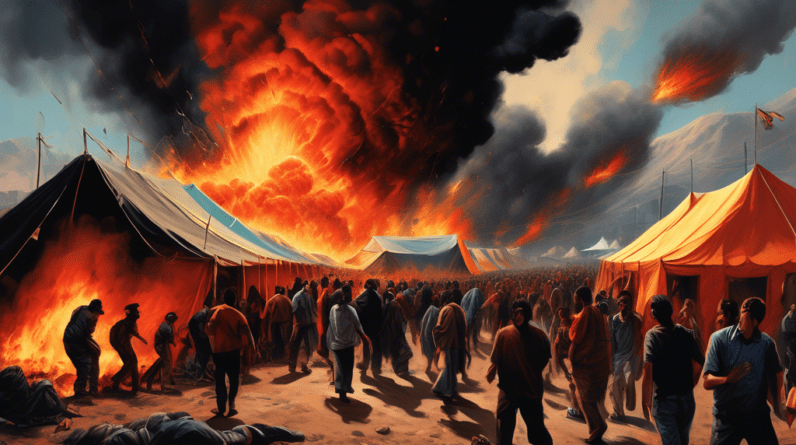

The image, which quickly went viral, has sparked debate about the ethical implications of using AI to generate images of conflict zones.

In a digital age saturated with information and misinformation, the line between reality and fabrication is becoming increasingly blurred. This is particularly concerning in depictions of conflict zones, where a misread image can carry severe consequences. The recent emergence of a hyperrealistic, AI-generated image depicting a fiery explosion near the Rafah tent camp in Gaza has thrown this issue into sharp relief, raising questions about ethics, the potential for manipulation, and the very nature of truth in the age of artificial intelligence.

The Power and Peril of AI-Generated Imagery

The image, shared widely across social media platforms, is strikingly realistic. It portrays a massive plume of smoke billowing into the sky, flames licking at the edges of a devastated cityscape, and terrified figures fleeing the inferno. The level of detail, the raw emotion, and the sheer immediacy of the scene can fool even a careful viewer. This realism is precisely what makes AI-generated imagery so compelling and, simultaneously, so dangerous.

On the one hand, AI image generation tools have the potential to democratize creativity, offering anyone with an internet connection the ability to produce high-quality visuals. They can be invaluable for artists, designers, and content creators, opening up new avenues for expression and innovation. Moreover, in journalism and documentary filmmaking, AI-generated imagery could be used to reconstruct events for which no footage exists, provided it is clearly labeled as synthetic, offering audiences a more immersive understanding of complex situations.

However, the same technology that enables these positive applications also carries significant risks. The ability to create such realistic imagery opens the door to misinformation and manipulation on a scale not seen before. In the wrong hands, AI-generated images can be used to fabricate events, spread propaganda, incite violence, and erode trust in legitimate news sources. This is particularly dangerous in the Israeli-Palestinian conflict, where tensions are already high and misinformation can have deadly consequences.

The Rafah Image: A Case Study in Ethical Concerns

The AI-generated image of the explosion near the Rafah tent camp brings these ethical dilemmas into sharp focus. While the image itself does not explicitly accuse any party of wrongdoing, its context and the timing of its release are crucial. Appearing amidst a period of heightened tensions and escalating violence, the image has the potential to inflame existing prejudices, deepen divisions, and hinder efforts towards peace.

The fact that the image is AI-generated adds another layer of complexity. Its hyperrealism makes it difficult to distinguish from an actual photograph, increasing the likelihood that it will be mistaken for a genuine one. This blurring of reality raises critical questions:

- Who is responsible for verifying the authenticity of images in the age of AI?

- How can we educate the public to be more discerning consumers of online content?

- What legal and ethical frameworks need to be put in place to prevent the malicious use of AI image generation?

These questions have no easy answers, but they demand urgent attention. As AI technology continues to advance, we can expect to see even more sophisticated and difficult-to-detect forms of synthetic media. This requires a collective effort from tech companies, policymakers, journalists, and the public to mitigate the risks and harness the potential of AI in a responsible and ethical manner.

Navigating the Future of Truth and Technology

The emergence of AI-generated imagery marks a significant turning point in how we consume and interpret information. It presents both a challenge and an opportunity. The challenge lies in navigating this new landscape responsibly, developing critical media literacy skills, and demanding greater transparency and accountability from those who create and distribute digital content.

The opportunity lies in leveraging the power of AI for good. By using AI responsibly, we can enhance storytelling, foster empathy, and promote a more nuanced understanding of the world around us. However, this requires a collective commitment to truth, a willingness to engage in critical thinking, and a recognition that the fight against misinformation is not just a technological one, but a human one as well.

Beyond the Spectacle: The Human Cost of Conflict

While the debate over AI-generated imagery rages on, it’s crucial to remember that behind every image of conflict, real lives are at stake. The conflict in Gaza is not a spectacle to be fabricated or manipulated for online engagement. It’s a humanitarian crisis with devastating consequences for millions of people.

Instead of getting caught up in the cycle of misinformation, it is our responsibility to seek out credible sources of information, engage in respectful dialogue, and advocate for peaceful resolutions. We must remember that technology, including AI, is a tool. It can be used for good or ill, and the choice ultimately lies with us.