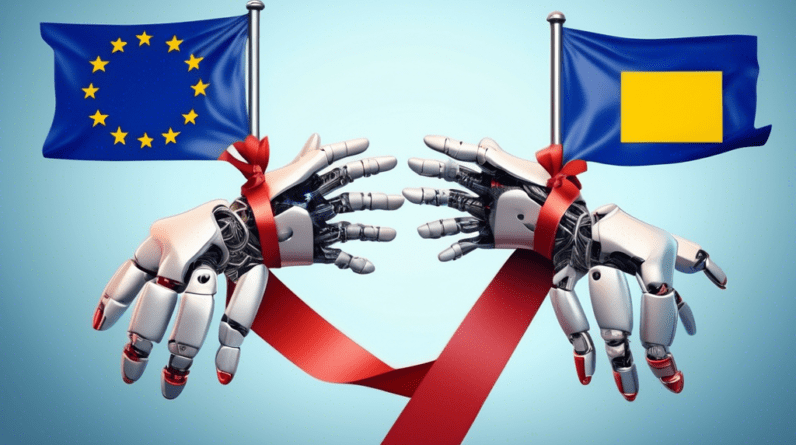

Meta, the parent company of Facebook and Instagram, has announced that it will not release its highly anticipated AI assistant in the European Union. The company cited concerns over complying with the bloc’s stringent rules on data and AI, specifically the EU’s Artificial Intelligence Act, as the primary reason for the decision. The announcement has ignited a debate about the balance between fostering innovation and safeguarding user privacy in the age of artificial intelligence.

The EU’s Artificial Intelligence Act: A Roadblock to Innovation?

The EU’s Artificial Intelligence Act, currently in its final stages of negotiation, aims to establish a comprehensive legal framework for AI systems across the bloc. The legislation sorts AI applications into four risk levels: unacceptable, high, limited, and minimal. Systems classified as high-risk, such as those used in critical infrastructure, law enforcement, and employment, face stricter requirements, including mandatory risk assessments, data quality controls, and human oversight.

Meta argues that the AI Act’s broad scope, particularly its definition of high-risk AI, creates uncertainty and hinders the company’s ability to deploy its AI assistant in the EU. The company claims that the Act’s requirements for data transparency, algorithmic accountability, and human oversight would necessitate significant modifications to the assistant, potentially delaying its release by months or even years.

Meta’s Perspective: Striking a Balance Between Innovation and Regulation

Meta maintains that it is committed to user privacy and supports the development of responsible AI regulations. However, the company believes that the EU’s current approach stifles innovation and places European businesses at a competitive disadvantage. It argues that a more flexible regulatory framework, one that allows for innovation while ensuring user protection, is crucial for fostering the growth of the AI industry in Europe.

The company emphasizes that its AI assistant has been designed with privacy in mind, incorporating features such as data minimization, on-device processing, and user controls. Meta believes that these measures align with the spirit of the EU’s data protection laws and demonstrate its commitment to responsible AI development.

The EU’s Stance: Prioritizing User Privacy and Ethical AI

The European Commission, the EU’s executive branch, has defended its stance on AI regulation, emphasizing that the bloc prioritizes user privacy and ethical AI development. It argues that the AI Act is essential to ensure that AI systems are developed and deployed responsibly, mitigating potential risks to fundamental rights and freedoms.

EU officials have stated that the AI Act is not intended to stifle innovation but rather to foster trust in AI technology. They emphasize that the legislation provides clear guidelines for developers and encourages the development of AI systems that benefit society as a whole. The Commission has also stressed that it is open to dialogue with companies like Meta to address specific concerns and find workable solutions.

The Implications: A Transatlantic Divide in AI Regulation?

Meta’s decision to withhold its AI assistant from the EU highlights the growing divergence in AI regulation between the EU and the United States. While the EU has taken a proactive approach, pursuing comprehensive legislation to govern AI, the US has been more hands-off, relying primarily on industry self-regulation.

This transatlantic divide in AI regulation has raised concerns about fragmentation and the potential for a race to the bottom, where companies gravitate towards jurisdictions with less stringent rules. It also poses challenges for businesses operating globally, which must navigate a complex patchwork of regulations.

The Future of AI in Europe: Finding Common Ground

The debate surrounding Meta’s decision underscores the need for ongoing dialogue and collaboration between policymakers, industry leaders, and civil society to shape the future of AI regulation in Europe. Striking a balance between fostering innovation and safeguarding fundamental rights is crucial for ensuring that AI technologies benefit society as a whole.

A potential path forward involves exploring more flexible regulatory mechanisms, such as regulatory sandboxes and pilot projects, that allow companies to test and refine AI systems in a controlled environment while adhering to ethical guidelines. Closer collaboration between the EU and the US on AI regulation could also help prevent fragmentation and promote the development of harmonized standards.

Conclusion

Meta’s decision to withhold its AI assistant from the EU due to regulatory concerns highlights the complex challenges of balancing innovation with ethical considerations in the age of AI. As AI technologies become increasingly integrated into our lives, finding a path forward that fosters both innovation and user trust is paramount. This will require ongoing dialogue, collaboration, and a willingness to adapt regulations to the ever-evolving landscape of artificial intelligence.